Generative AI technologies powered by Large Language Models (LLMs), such as OpenAI’s ChatGPT, have shown themselves to be both a big boon to productivity and a concerningly confident purveyor of incorrect information. At Mozilla, we’re excited about the potential role generative AI can play in creating new value for people while demonstrating leadership in responsible and ethical implementation approaches.

One domain where we see high value is in training LLMs on reference documentation, enabling developers to more quickly find solutions or get answers about the purpose or behavior of code snippets. MDN’s mission is to provide a blueprint for a better internet and empower a new generation of developers and content creators to build it. We see a path forward to “overlay” useful AI-driven helpers on top of MDN’s canonical web dev documentation to aid developers in new ways. Of course, human-authored canonical documentation will always be available and clearly called out as such. To this end, last week, MDN launched AI integrations with its body of web developer reference documentation, manifesting in two features that have been in development over the last few months: AI Help and AI Explain, both powered by GPT-3.5.

AI Help enables MDN readers to ask questions through a conversational interface, and the tool offers concise answers along with MDN articles related to their questions for contextual help. AI Help is limited to offering information based only on MDN content. It is now in beta and available to logged-in MDN readers.

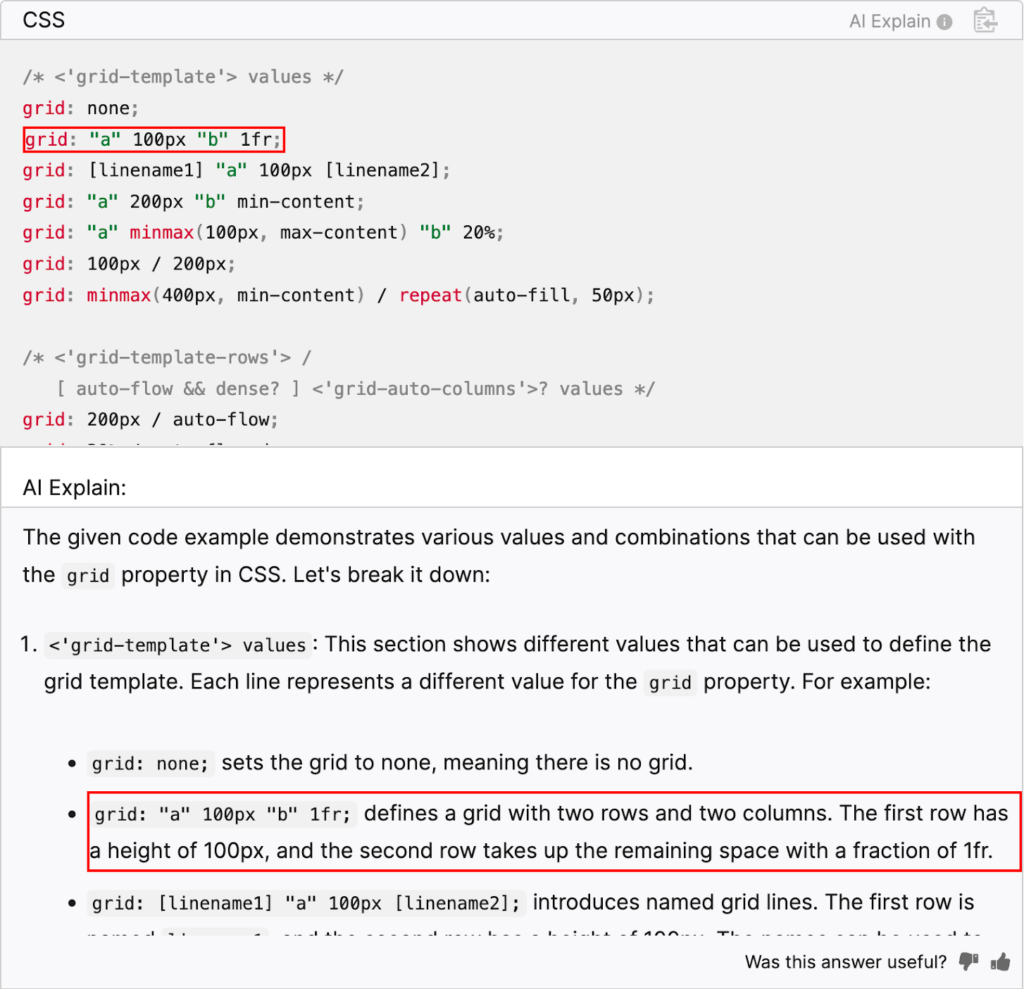

AI Explain enables readers to explore and understand code blocks and parts of code examples embedded in MDN documentation pages, describing the underlying purpose and behavior of the code. AI Explain is still an experimental tool.

Our internal work on these tools has us hugely optimistic about their potential to save developers tons of time as they seek answers and learning resources. Early-stage developers appear to benefit most, as they are least likely to know exactly where to go or which keywords to search for to find the answers they seek. As we’re still in the early stages, we’re also seeing instances where these AI tools provide incorrect information in response to a query. The MDN team is working to identify these cases and develop fixes so that we can continuously raise the quality and utility of the answers provided by AI Help and AI Explain. We are also planning to make it easier for people to flag bad answers, creating issues sent to the team to investigate. In the end, our goal is to make MDN more accessible and useful to a wider variety of developers, without diminishing MDN’s role as the canonical reference source for high-quality information about developing for the web.

Feedback Matters

With the launches of AI Help and AI Explain last week, we received a wide range of feedback from readers, from delight to constructive criticisms to concerns about the technical accuracy of the responses. We’re only a handful of days into the journey, but the data so far seems to indicate a sense of skepticism towards AI and LLMs in general, while those who have tried the features to find answers tend to be happy with the results.

For AI Help in particular, the feedback indicates that the majority of people who used this feature and voted consider the answers to be helpful. Please do try AI Help and give us your feedback so that we can enhance this service based on how it works for you!

In the case of AI Explain, the pattern of feedback we received was similar, but readers also pointed out a handful of concrete cases where an incorrect answer was rendered. This feedback is enormously helpful, and the MDN team is now investigating these bug reports. We’ve elected to be cautious in our approach and have temporarily removed the AI Explain tool from MDN until we’ve completed our investigation and have high-quality remediations in place for the issues that have been observed.

Here is an example of an incorrect answer: the AI states that the code defines a grid with two rows and two columns, when it actually defines only two rows.

AI Assistants: A Helpful Complement

One of the user experience challenges LLMs present is how to set customer expectations. As useful and efficient as it is to use LLMs to interact with reference documentation, even extraordinarily well-trained LLMs — like humans — will sometimes be wrong. We see this as an emerging challenge in the field of human-computer interaction that goes well beyond MDN’s limited use case: What should people do when chat-based systems render answers in good faith that are merely likely to be correct? One approach to responsible systems design could be to provide people with better ways to check answers and build confidence in their veracity. This is a field we’re excited to participate in.

Speaking of the human element, LLMs also have the useful attributes of having zero ego and infinite patience. LLMs don’t mind answering the same question over and over again, and they feel no compulsion to gate-keep for online communities. It’s sadly not uncommon today for learners to face the discouraging experience of asking technical questions in online forums only to be dismissed or shamed for their “dumb question.” When done well, there is a clear opportunity to employ AI-based tools to improve the pace of learning as well as inclusivity for learners.

The important question, and the core of this venture, is whether these AI assistants can improve our work and user experience. Do they help our users find information faster? Do they simplify complex concepts for them? Do they support Mozilla’s values and mission? If the answer is ‘yes’ to these questions, then they are fulfilling their purpose. We will work to convey this perspective clearly to everyone who interacts with our AI features.

The MDN Community

MDN has a long history as a human-authored and curated source of knowledge, so we know that AI integration in MDN will be a sensitive topic for some. While many are excited about the possibilities of generative AI, others might prefer that MDN stay how it is. That’s fine. We are a community, and differences of opinion are normal and healthy. MDN’s strength lies in our community. We do see a path forward that preserves the human-authored goodness while also providing tools that offer additional value over that amazing body of content. We need your input, criticism, kudos, and experience to ensure we’re employing AI in the most useful and responsible ways. Your feedback is critical to this process, and we will continue to take the feedback and adjust our plans in response to it. LLM technology remains relatively immature, so there will certainly be speed bumps along the way. This journey has just begun, and together, we’ll shape MDN’s future.

In the continued spirit of community and transparency, the MDN team will publish a postmortem blog post in the coming days that will include a breakdown of the feedback we’ve received and dive into some of the details of developing, launching, and pausing AI Explain.

The post Responsibly empowering developers with AI on MDN appeared first on The Mozilla Blog.

Original article written by Steve Teixeira